Tableau Conference 2025 | Know Before You Go

If you’re a data enthusiast, analytics professional, or just someone curious about Tableau’s latest innovations, the Tableau Conference 2025 is your...

Recently, I was assisting an analytics team with implementing a Snowflake cloud data warehouse. This particular team did not have an existing data warehouse, instead they were extracting CSV files from their source systems to handle reporting in Excel. Our plan was to load these CSV files to tables within Snowflake and build reporting views that would connect to Tableau.

This team was small and had many responsibilities, so our ultimate goal was to automate each step of this process so their data source creation and reporting would require minimal maintenance. On previous projects, I had utilized other cloud ELT tools to handle the loading and transformations. These tools usually have scheduling capabilities that make automating this process very simple. The team I was working with on this project, however, wanted to keep their data processing within the Snowflake instance.

Snowflake created Tasks as a tool to help users execute SQL statements on a consistent schedule. They can be created with dependencies, so there are tasks that will run after a predecessor task has completed successfully. Tasks run using a user specified warehouse and can be scheduled to run at specific intervals or using CRON notation. CRON notation allows you to be very specific about the dates and time of task execution.

Snowflake recommends creating a custom role for task ownership. This role should have the CREATE TASK and EXECUTE TASK privileges.

Here is an example of using Tasks to load data to tables and create a reporting view with some sample data sets.

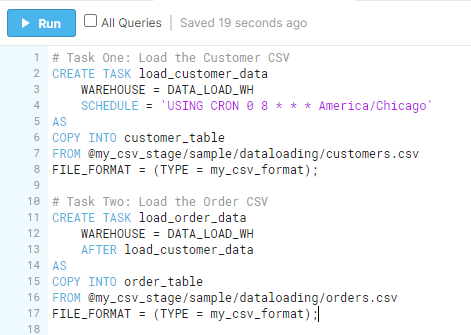

In the Task One example, you can see that I used the CREATE TASK command to give a name, specify a warehouse, and schedule an execution time for the first task. The CRON notation here means that this task will run at 8am Central every single day. This task will execute the entire SQL statement that appears after the “AS”. This SQL statement will copy the contents of the csv file in the file path into the customer_table.

For Task Two, I followed a very similar process. The main difference you will see is that I specified that this task should run AFTER the first task “load_customer_data”. This establishes the Parent and Dependent tasks. The second task will begin to run after the first task completes successfully.

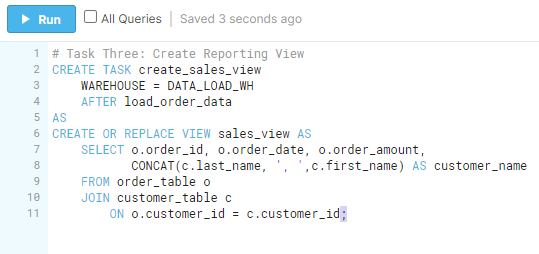

For Task Three, we changed the AFTER statement so that this task will run after the order data has been loaded. This means that once all of our data has been loaded to tables, this SQL statement will join them together to create a view of the sales that can be used for reporting.

Once these tasks have been created you can run SHOW TASKS; to view them and if changes are necessary you can use ALTER TASK to do so. You will notice that the state of the tasks is listed as “suspended”. Snowflake requires the user to “Resume” the task in order for the SQL statements to run on the schedule specified.

![]()

This is a System Function that allows you to resume all dependent tasks for the specified task rather than executing one for each of them.

![]()

You will use this statement syntax to resume the Parent Task. You can replace the RESUME with SUSPEND if you would like the task to stop running.

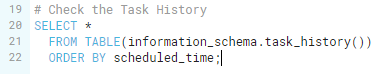

Unfortunately at the moment, there is no way to be notified if a task completes successfully or unsuccessfully. However, Snowflake stores the task history within the Information schema so you can query that table to check if and when the tasks have completed running.

Using Tasks, you are not only able to accomplish the full ELT process without using other tools, you can automate this process to run on your desired schedule.

-2.gif)

If you’re a data enthusiast, analytics professional, or just someone curious about Tableau’s latest innovations, the Tableau Conference 2025 is your...

Tableau Plus is the new premium offering from Tableau, a leading data visualization and business intelligence platform. It builds upon the...

If you've spent any time working with Tableau, you've likely encountered the dreaded "Cannot Mix Aggregate and Non-Aggregate Arguments" error. It's a...